About the Video

This video is one of the sessions at “Financial APIs Workshop 2018”, held on July 24th, 2018 in Tokyo.

Justin Richer from Authlete compares Authlete’s unique semi-hosted approach with traditional approaches for deploying OAuth infrastructure, and explains how Authlete has extended its client authentication functions and added mutual TLS support to implement Financial-grade API (FAPI).

You can download the slides from here.

Transcript

Preface

Justin: Hi everybody. My name is Justin Richer. I’ve been working with Authlete for about the past year or so.

One of the projects we’ve been doing recently has been enhancing the Authlete server, the authorization server, to support the FAPI protocol.

Now I’ll give you guys a little bit of background on how the Authlete system works, because it is fairly different from a lot of other implementations.

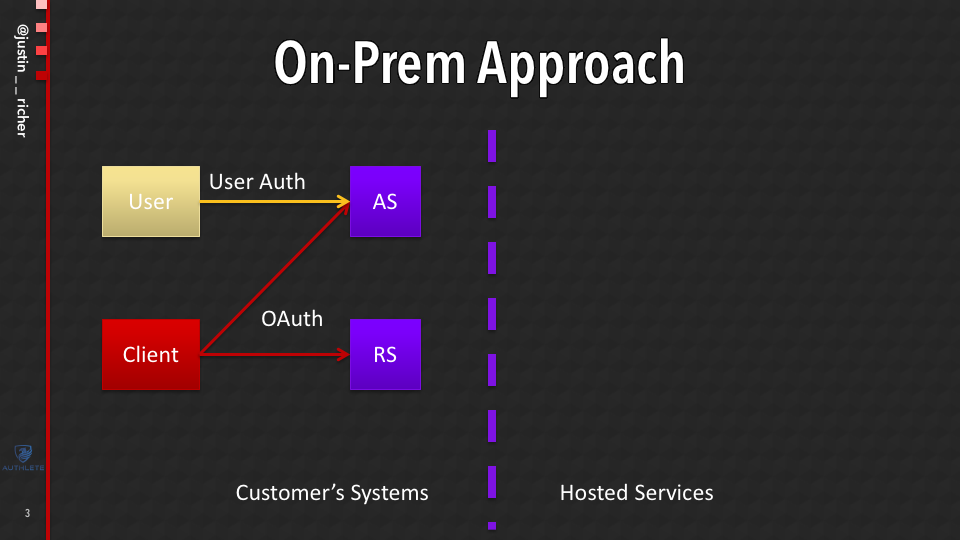

And when you’re going out to build an OAuth infrastructure, traditionally you’ve got one of two major choices.

On-Prem Approach

You can host everything on-premises: you build up your server and have everything inside your security domain. And these days, of course, on-premises might be in a cloud that you control or something like that. The on-premises that we have today isn’t the same as what we used to have.

But really this is all about what’s within your control. You run your authorization server, your resource server, and all of your user accounts, and everything talks together.

This is the model that OAuth was really built in. This is the world that OAuth comes from: this kind of, you know, “I control the whole stack, I know where everything is.”

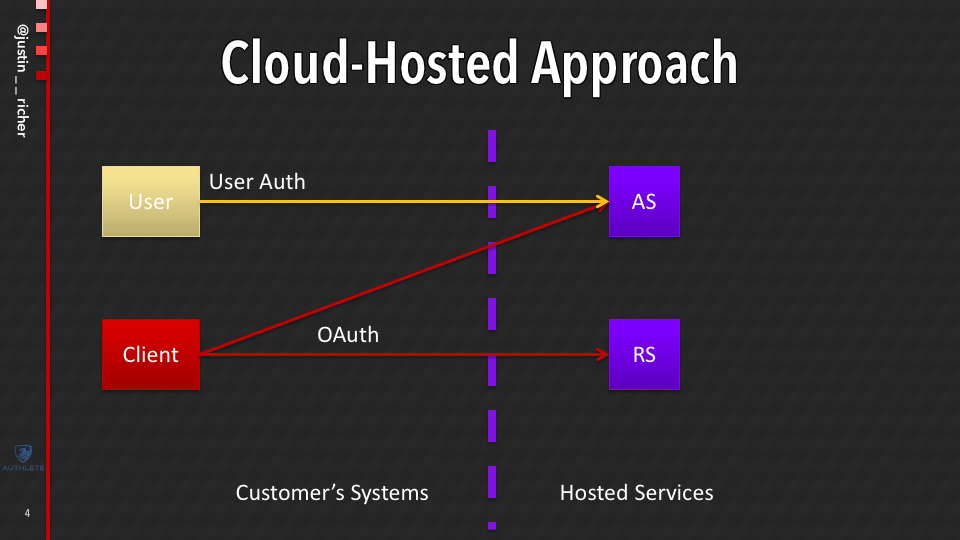

Cloud-Hosted Approach

These days though, you can get a lot of things offered as a service and you can get OAuth as a service from a lot of different providers out there.

There are a lot of different companies that will be happy to sell you something where basically they run the authorization server. So you send your users out there, they authenticate over there, and you come back with tokens that are then used to call APIs.

All that stuff comes together.

What if there’s another option? Because there are benefits and drawbacks to both of these.

And one of the things that really makes people a bit nervous about this cloud-hosted pattern is, “I’m sending my users over there and sending my credentials outside,” and stuff like that.

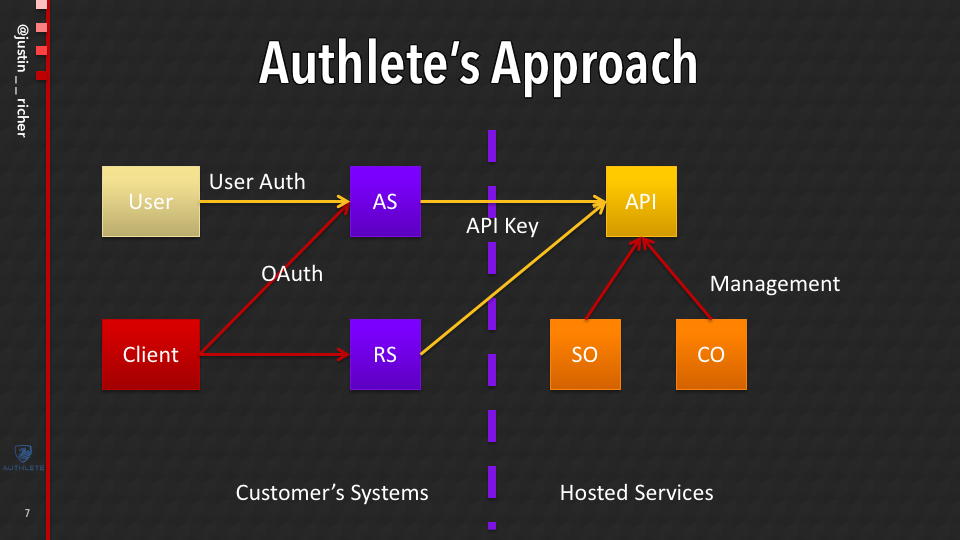

Authlete’s Approach is a Semi-hosted Approach

Authlete’s approach is a semi-hosted approach, which means that there’s an API that allows you to build out an OAuth-compliant system.

In other words, there’s an API that the software you host goes and calls. So the OAuth processing happens within your control, but then it goes and calls the Authlete API and asks, “What do I do next?”
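As a rough illustration of that “what do I do next” pattern, the sketch below shows a token endpoint that forwards what it received to a decision function and simply relays the answer. The function `decide_next_step` stands in for the remote API call, and the action names and fields are hypothetical, chosen for this example rather than taken from Authlete’s actual API.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str   # hypothetical action name chosen by the API
    status: int   # HTTP status the AS should return
    body: str     # response body the AS should return


def decide_next_step(authorization_header, form_params):
    """Stand-in for the hosted API; a real deployment would make an HTTPS call here."""
    if form_params.get("grant_type") != "authorization_code":
        return Decision("BAD_REQUEST", 400, '{"error":"unsupported_grant_type"}')
    if not authorization_header:
        return Decision("INVALID_CLIENT", 401, '{"error":"invalid_client"}')
    return Decision("OK", 200, '{"access_token":"example-token","token_type":"Bearer"}')


def token_endpoint(authorization_header, form_params):
    """Runs on the customer's AS: it holds no OAuth logic and just relays the answer."""
    decision = decide_next_step(authorization_header, form_params)
    return decision.status, decision.body
```

Because the endpoint only relays the decision, new behavior can be added inside `decide_next_step` without touching the code that the customer hosts.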

Adding New Features to Authlete

Why is this really relevant and important to the world of FAPI?

With this approach, much like with a cloud-hosted service, we can add new features to the (Authlete) API system and then make them available to people who are building up their systems without them having to change anything.

So, a good example of this is when we added PKCE support for mobile applications into the Authlete server. Our customers could then just make use of it. They could just start sending code challenges and code verifiers and it would just work in their systems without them having to go and upgrade their authorization service to support all of this.

Because the Authlete API would tell them what to do with these new things.
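For readers unfamiliar with the code challenges and code verifiers mentioned here, the following is a minimal sketch of the S256 method defined in RFC 7636. The function names are made up for this example; only the transformation itself (base64url of the SHA-256 hash, without padding) comes from the specification.

```python
import base64
import hashlib
import hmac
import secrets


def make_code_verifier():
    """Client side: a random verifier (32 random bytes give 43 URL-safe characters)."""
    return secrets.token_urlsafe(32)


def code_challenge_s256(verifier):
    """code_challenge = BASE64URL(SHA256(ASCII(code_verifier))), with padding stripped."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def verify_pkce(stored_challenge, presented_verifier):
    """Server side: recompute the challenge from the verifier sent to the token endpoint."""
    return hmac.compare_digest(stored_challenge, code_challenge_s256(presented_verifier))
```

The client sends the challenge with the authorization request and the verifier with the token request; the server only has to remember the challenge in between.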

New Features for FAPI

So that brings us to what needed to be changed for FAPI. Now FAPI and Open Banking, they’re going to be merging in the near future. They both add a few different requirements that the Authlete server did not support out of the box.

First, a more advanced client authentication model. Second, mutual TLS client certificates are going to be required.

Then there’s scope management, for how you deal with read requests versus read/write requests and potentially other APIs and profiles. And there’s also some processing of request objects.

I’m gonna take a few minutes to talk about how we implemented the first two. And Hide (Hideki Ikeda) is going to come in and talk about the second two and wrap up the latter part of this presentation.

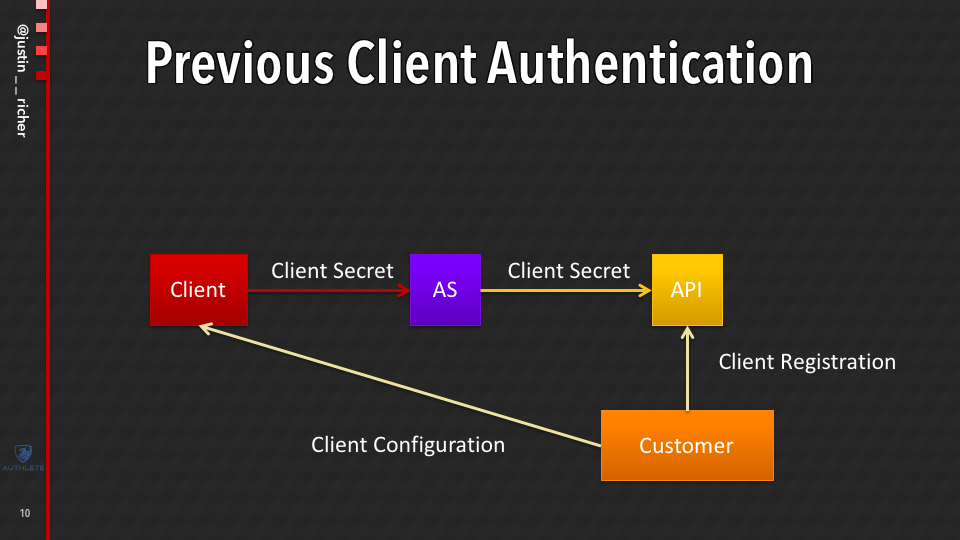

Previous Client Authentication in Authlete

So previously in Authlete, this is how client authentication worked.

The customer shows up. They talk to the API through the management consoles and stuff like that. And they register the client and they get the client configuration which includes a secret. And they hand that to the client software.

The client then hands it to the AS (Authorization Server) during the OAuth transaction. And the AS doesn’t actually know anything about client secrets. Remember, this is all running on the customer’s systems. It goes and asks the Authlete API, “Is this secret any good?” Now for client secrets, it’s a shared secret.

This model makes a lot of sense. It makes it really simple for developers of the AS, the people who want to deploy this, to be able to say, like, “I just got a request. What’s the next step? What do I do?”

In that way, when we do rotation, when we want to enforce stricter client secrets and things like that, we can do that at the API side and the AS benefits from that without having to change its code.
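The “Is this secret any good?” check can be sketched roughly as follows. This is an illustration only: the `REGISTERED_SECRETS` table and the function names are hypothetical, and the sketch covers only HTTP Basic credentials as described in RFC 6749.

```python
import base64
import hmac
from urllib.parse import unquote_plus

# Hypothetical registry kept on the API side; the AS never sees this table.
REGISTERED_SECRETS = {"client-1": "s3cr3t"}


def parse_basic(header):
    """Extract (client_id, client_secret) from an HTTP Basic header, or return None."""
    scheme, _, value = (header or "").partition(" ")
    if scheme.lower() != "basic":
        return None
    try:
        decoded = base64.b64decode(value, validate=True).decode("utf-8")
    except ValueError:  # covers malformed base64 and undecodable bytes
        return None
    client_id, sep, secret = decoded.partition(":")
    if not sep:
        return None
    # RFC 6749 has both values form-urlencoded before they are base64-encoded.
    return unquote_plus(client_id), unquote_plus(secret)


def is_secret_good(header):
    """API side: compare the presented secret with the registered one in constant time."""
    creds = parse_basic(header)
    if creds is None:
        return False
    client_id, secret = creds
    expected = REGISTERED_SECRETS.get(client_id)
    if expected is None:
        return False
    return hmac.compare_digest(expected.encode("utf-8"), secret.encode("utf-8"))
```

Since the comparison lives on the API side, rules such as rotation or minimum secret strength could be tightened there without the AS changing.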

This would be great, except that Authlete, as it was written before we started this, only knew how to deal with client secrets, which meant that all of our customers’ ASes (Authorization Servers) only knew how to deal with client secrets.

Now this itself is not really surprising. This is far and away the most common way for OAuth clients to authenticate to an authorization server out there. If you’re going out and authenticating to different systems, this is how things run. So it makes a lot of sense that a lot of other OAuth implementations do that.

But in order to support FAPI and Open Banking, we needed more.

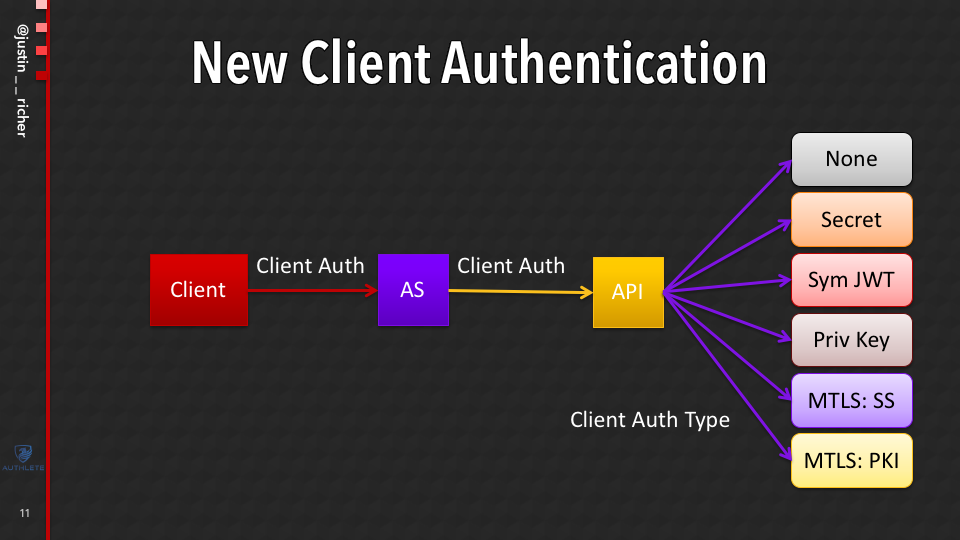

New Client Authentication Mechanism

So we needed to be able to have this call come into the API and figure out: are you a public client, are you using client secrets, symmetrically signed JWTs, asymmetrically signed JWTs, or mutual TLS with either a self-signed certificate or PKI?
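One way to picture that first step is a small classifier over what the client presented. The sketch below is an assumption about how such a check could look, not Authlete’s implementation. The returned strings are the standard `token_endpoint_auth_method` names, except `"mtls"`, which is a placeholder because PKI versus self-signed depends on how the client was registered. It only classifies; nothing is verified here.

```python
import base64
import json

JWT_BEARER = "urn:ietf:params:oauth:client-assertion-type:jwt-bearer"


def jwt_alg(assertion):
    """Read the 'alg' value from a JWT header without verifying anything."""
    header_b64 = assertion.split(".")[0]
    padded = header_b64 + "=" * (-len(header_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded)).get("alg", "")


def presented_method(authorization_header, params, has_client_cert):
    """Guess which authentication method the request is using."""
    if params.get("client_assertion_type") == JWT_BEARER and "client_assertion" in params:
        alg = jwt_alg(params["client_assertion"])
        # HS* algorithms are keyed with the shared secret; everything else is asymmetric.
        return "client_secret_jwt" if alg.startswith("HS") else "private_key_jwt"
    if (authorization_header or "").lower().startswith("basic "):
        return "client_secret_basic"
    if "client_secret" in params:
        return "client_secret_post"
    if has_client_cert:
        return "mtls"  # placeholder label, resolved against the client's registration
    return "none"  # public client
```

The result would then be compared with the method the client registered, so that a client registered for one method cannot quietly fall back to a weaker one.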

But here’s the cool thing.

When we went to go and implement this in the Authlete API, it took us a fair bit of reengineering on the API side in order to figure out how to support all of these different methods, make sure all the keys were registered in the right places, do all of the security checks, and things like that.

But now all of the downstream systems that are calling the Authlete API get to just use this.

Because it turns out that, in order for the API to process that, the AS needs to send all of that client authentication. But since this is an OAuth transaction, what the AS has always been doing is sending over the Authorization header and the list of parameters that were posted to the token endpoint, and then the API figures out what needs to happen next.

Which means that, for all authorization servers that are connected into the API, with this new functionality deployed, they can just start deploying clients and registering clients that have symmetric or asymmetric keys for example, or mutual TLS or things like that.

Because in doing this, the authorization server is still going to just take that entire request, pass it over to the server and it’s going to say, “For this particular client, what is the correct key and the signature validator? Does this request make any sense? Is the client allowed to do all of this?” All of that type of processing is happening over here.
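To make “the correct key and the signature validator” concrete, here is a minimal sketch for the symmetric case, where the API looks up a client’s shared secret and checks an HS256-signed assertion. The `CLIENTS` table and function names are hypothetical. A real validator would also have to check the claims (issuer, subject, audience, expiry, replay), and asymmetric keys need a cryptography library, so both are left out of this sketch.

```python
import base64
import hashlib
import hmac
import json


def b64url_decode(text):
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def b64url_encode(data):
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


# Hypothetical registrations held on the API side.
CLIENTS = {"client-1": {"method": "client_secret_jwt", "secret": b"shared-secret-value"}}


def verify_hs256(assertion, secret):
    """Check the HMAC-SHA256 signature of a compact JWT (signature only, no claims)."""
    try:
        header_b64, payload_b64, sig_b64 = assertion.split(".")
        header = json.loads(b64url_decode(header_b64))
        signature = b64url_decode(sig_b64)
        signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    except ValueError:  # wrong part count, bad base64, bad JSON, non-ASCII input
        return False
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        return False
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    return hmac.compare_digest(expected, signature)


def check_client_assertion(client_id, assertion):
    """Pick the key and validator based on how this client was registered."""
    client = CLIENTS.get(client_id)
    if client is None or client["method"] != "client_secret_jwt":
        return False
    return verify_hs256(assertion, client["secret"])
```

The AS in this picture never touches the key material; it only forwards the assertion it received.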

And this required, like I said, a lot of reengineering on our side, on the API, which kind of magically flows downstream.

How Traditional MTLS Works

Then there’s the problem of mutual TLS.

Mutual TLS with the Authlete model gets really weird, really fast.

And this comes about as you think about how mutual TLS was intended to work. If you go through the TLS specifications and a lot of the stuff that’s written about TLS, it’s all about one computer talking to another computer. The computer that you’re talking to has some root authority that it checks to make sure that your certificate validates and you are the correct party, and you go on your way. That’s how TLS is meant to work.

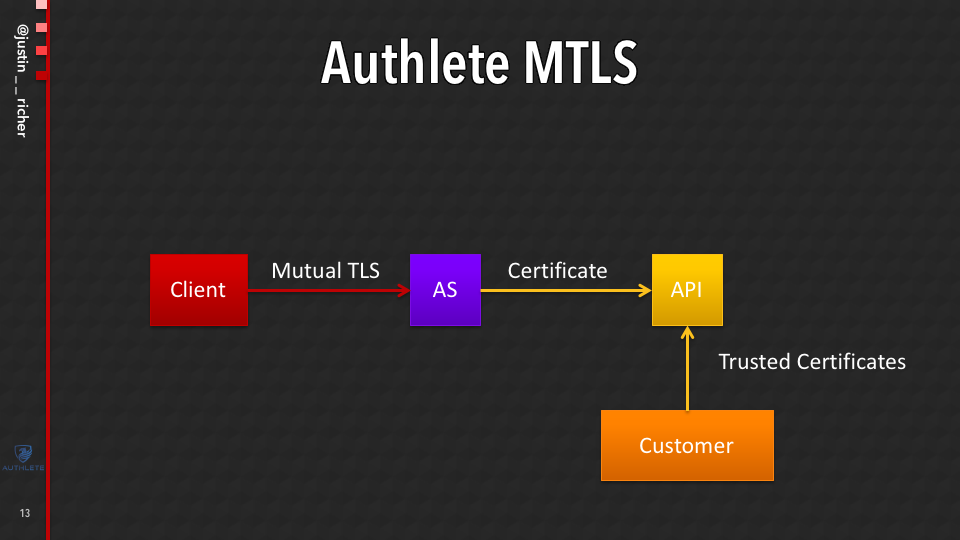

Mutual TLS in the Authlete Model

But remember, in the Authlete model we’re talking to an API. So what do we do for mutual TLS in the Authlete model?

The client is going to do mutual TLS authentication to the authorization server, then the authorization server is basically just going to say, “Hey here’s the certificate that I was presented as part of that incoming thing. I’m gonna hand that over to the API.”

And then the API is effectively gonna be acting as my certificate authority. It’s going to figure out, “Does this certificate make sense for this client in this context?”
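The hand-off described here might look something like the sketch below, in which the AS packages the certificate it was presented alongside the rest of the token request. The payload field names (`authorization`, `parameters`, `client_certificate`) are hypothetical and chosen for this example; only the `ssl` and `urllib` helpers are real standard-library calls.

```python
import json
import ssl
from urllib.parse import urlencode


def build_api_request(authorization_header, form_params, client_cert_der):
    """AS side: bundle everything the AS saw into one JSON payload for the API."""
    payload = {
        "authorization": authorization_header,
        "parameters": urlencode(form_params),
    }
    if client_cert_der is not None:
        # Pass the presented certificate along as PEM text; the AS does not judge it.
        payload["client_certificate"] = ssl.DER_cert_to_PEM_cert(client_cert_der)
    return json.dumps(payload)
```

The AS makes no trust decision about the certificate in this sketch; it only reports what was used on the connection.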

Now here’s what I find particularly interesting about this model.

Like I said, TLS assumes that it’s one computer talking to another. In today’s environment, with cloud services and microservices and Docker containers and stuff like that, what does “computer” even mean anymore?

We’ve got one system talking to another system. Chances are you’re going through a TLS terminator, reverse proxies, API gateways and a whole stack of other things.

This notion that we’ve got point-to-point encryption, and that it’s fully authenticated and fully validated in both directions, doesn’t really match the deployment model of computers that we have today.

So the kinds of things that we’re doing here look kind of weird from an out-of-the-box TLS perspective. But I would argue that’s because TLS is slow at keeping up with how computers are being deployed these days.

Customer’s Authorization Server

So like I said, what we do in this setup is, on this link (between a client and an AS), we do the mutual TLS and we validate that TLS socket.

But we don’t authenticate the certificate itself. We will accept any certificate on that connection. We don’t care whom it’s signed by. It could be self-signed; we don’t even check that. We turn all of that off.

And then we turn around and hand that to the authorization server. Now, we make sure that the certificate that’s being presented is the one that’s being used in the TLS channel.

But what we don’t do is make sure that it is signed by a CA that we trust or anything like that. We offload all of that to the Authlete API.

Authlete API

Now the Authlete API itself can’t validate this TLS connection. It has no insight into that.

The entire purpose of using TLS is that people outside of that point-to-point connection can’t see anything about it.

But what it can say is, “Whatever the customer’s AS says was the certificate that was used, I’m gonna make sure that it’s tied to the correct client, that the client has the correct rights to it, that it is associated with all the right accounts, and that it makes sense for this transaction.”

So if I have a client that is registered for PKI-based MTLS, which means that the Authlete API is gonna have a root certificate, and it presents a self-signed cert, the (Authlete) API is going to reject that and tell the AS to reject that call. Even though it is a valid TLS connection as far as the AS is concerned, the OAuth transaction gets rejected.
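That rejection rule can be sketched as a small decision function on the API side. Everything here is an illustrative assumption: the `Registration` and `PresentedCert` types are invented for the example, and `chains_to_trusted_root` stands for the result of certificate path validation performed elsewhere. The two method names are the ones defined in RFC 8705.

```python
import base64
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional


def thumbprint(der):
    """SHA-256 thumbprint of a DER certificate, base64url-encoded without padding."""
    return base64.urlsafe_b64encode(hashlib.sha256(der).digest()).rstrip(b"=").decode("ascii")


@dataclass
class Registration:
    method: str                            # "tls_client_auth" (PKI) or "self_signed_tls_client_auth"
    subject_dn: Optional[str] = None       # expected subject for PKI clients
    cert_thumbprint: Optional[str] = None  # expected certificate for self-signed clients


@dataclass
class PresentedCert:
    der: bytes
    subject_dn: str
    chains_to_trusted_root: bool  # assumed output of path validation done elsewhere


def certificate_acceptable(reg, cert):
    """Decide whether the certificate reported by the AS fits this client's registration."""
    if reg.method == "tls_client_auth":
        # PKI client: must chain to the configured root and carry the expected subject.
        return cert.chains_to_trusted_root and cert.subject_dn == reg.subject_dn
    if reg.method == "self_signed_tls_client_auth":
        # Self-signed client: must be exactly the certificate that was registered.
        return reg.cert_thumbprint is not None and hmac.compare_digest(
            reg.cert_thumbprint, thumbprint(cert.der)
        )
    return False
```

A client registered for the PKI method that shows up with a self-signed certificate fails the first branch, which corresponds to the rejected transaction described above.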

Conclusion

We’re seeing this model more and more as we go to more microservices, API proxies, cloud-based providers, and things like that. This is how TLS is really getting used in the real world. That’s what I think is really kind of exciting about this.

It’s kind of an example of how the world is changing how we look at TLS and the roots of trust across all of these different peering services.